The main way to scale Smarty horizontally is cluster mode: running instances on several servers and distributing requests among them with a balancer.

It is good practice to assign server roles so that each server performs only its own task. Running Smarty, the DBMS and the balancer on the same server is not recommended.

You can choose any scheme that suits you based on fault tolerance, performance and budget requirements.

Before reviewing the cluster configuration schemes, it is important to familiarize yourself with the description of the Smarty architecture.

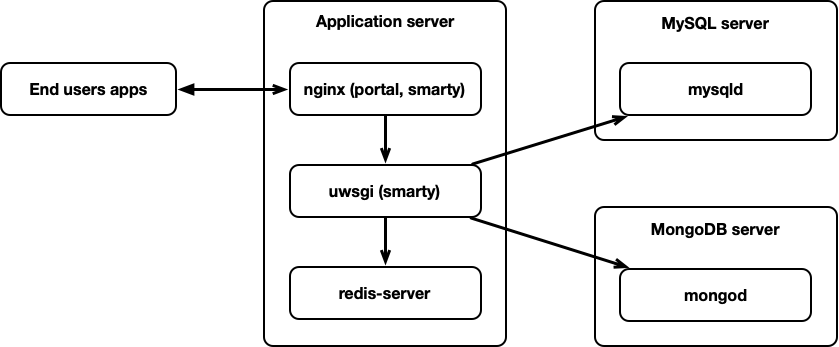

Standard configuration

The standard configuration provides neither fault tolerance nor load balancing.

In this configuration, nginx (serving the portal and acting as a uwsgi proxy), the uWSGI server and a Redis server for caching are all installed on a single server. Subscriber applications communicate with the nginx web server on that server.

An SQL database (for example, MySQL) for storing metadata and MongoDB for storing statistical data are installed on separate servers.

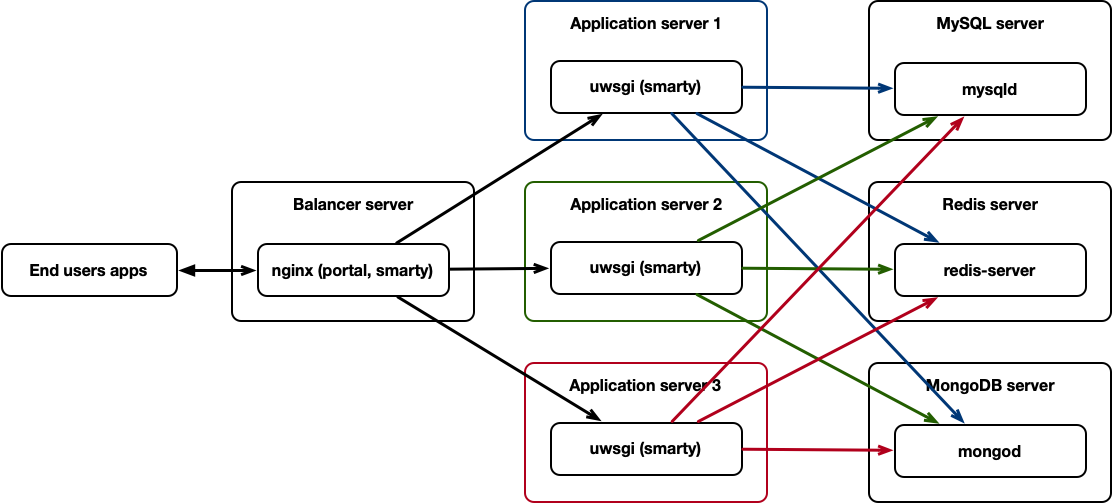

uWSGI load balancing configuration with partial fault tolerance

This option adds a request balancer and additional Smarty servers.

nginx can act as the balancer using the upstream mechanism and the uwsgi_pass directive. A hardware HTTP request balancer can also be used; in that case, each Application server also needs its own web server configured as a uwsgi proxy.

nginx on the balancer communicates with the uWSGI servers over TCP sockets.
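The request distribution described above can be illustrated with a minimal Python sketch. It is a simplified model, not nginx's implementation: nginx's default upstream method is weighted round-robin and temporarily skips servers that fail (see the `max_fails` and `fail_timeout` server parameters), whereas this sketch uses plain round-robin with an explicit "down" set. The class name and backend addresses are hypothetical.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Plain round-robin over uWSGI backends, skipping those marked down."""

    def __init__(self, backends):
        self.backends = list(backends)
        self.down = set()
        self._order = cycle(self.backends)

    def mark_down(self, backend):
        self.down.add(backend)

    def mark_up(self, backend):
        self.down.discard(backend)

    def next_backend(self):
        # Look at each backend at most once per call, so a pool where every
        # backend is down raises instead of looping forever.
        for _ in range(len(self.backends)):
            backend = next(self._order)
            if backend not in self.down:
                return backend
        raise RuntimeError("no uWSGI backend available")


# Hypothetical uWSGI TCP sockets on the three Application servers.
lb = RoundRobinBalancer(["10.0.0.11:3031", "10.0.0.12:3031", "10.0.0.13:3031"])
print([lb.next_backend() for _ in range(4)])  # cycles .11 -> .12 -> .13 -> .11
lb.mark_down("10.0.0.12:3031")
print([lb.next_backend() for _ in range(4)])  # .13 -> .11 -> .13 -> .11
```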

The three Application servers operate autonomously (no specific Smarty settings are required on these servers) and connect to shared SQL, Redis, and MongoDB servers.

Periodic crontab tasks, such as EPG import, must be configured on only one of the Smarty servers. To synchronize media files (channel icons, broadcast covers, movie covers and other downloadable files) between servers, use NFS or another solution such as btsync or GlusterFS.

This scheme is only partially fault-tolerant because the balancer, the SQL server and Redis are not redundant.
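The reasoning above can be checked mechanically. The following sketch is an illustration, not part of Smarty: it lists instance counts for this scheme and flags required components that run as a single instance. The component labels are hypothetical, and MongoDB is marked optional because its state does not affect service availability. Counting instances is a simplification, since redundancy also needs something (here, the balancer) to route around a failed instance.

```python
# Instance counts for this scheme; "required" is False for MongoDB because
# its state does not affect service availability for subscribers.
TOPOLOGY = {
    "balancer": {"instances": 1, "required": True},
    "smarty_app": {"instances": 3, "required": True},
    "sql": {"instances": 1, "required": True},
    "redis": {"instances": 1, "required": True},
    "mongodb": {"instances": 1, "required": False},
}


def single_points_of_failure(topology):
    """Return required components that have no second instance, sorted by name."""
    return sorted(
        name
        for name, spec in topology.items()
        if spec["required"] and spec["instances"] < 2
    )


print(single_points_of_failure(TOPOLOGY))  # ['balancer', 'redis', 'sql']
```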

Since MongoDB is not a mandatory service and its state does not affect the availability of the IPTV/OTT service for subscribers, its redundancy is covered only in the most complex configuration.

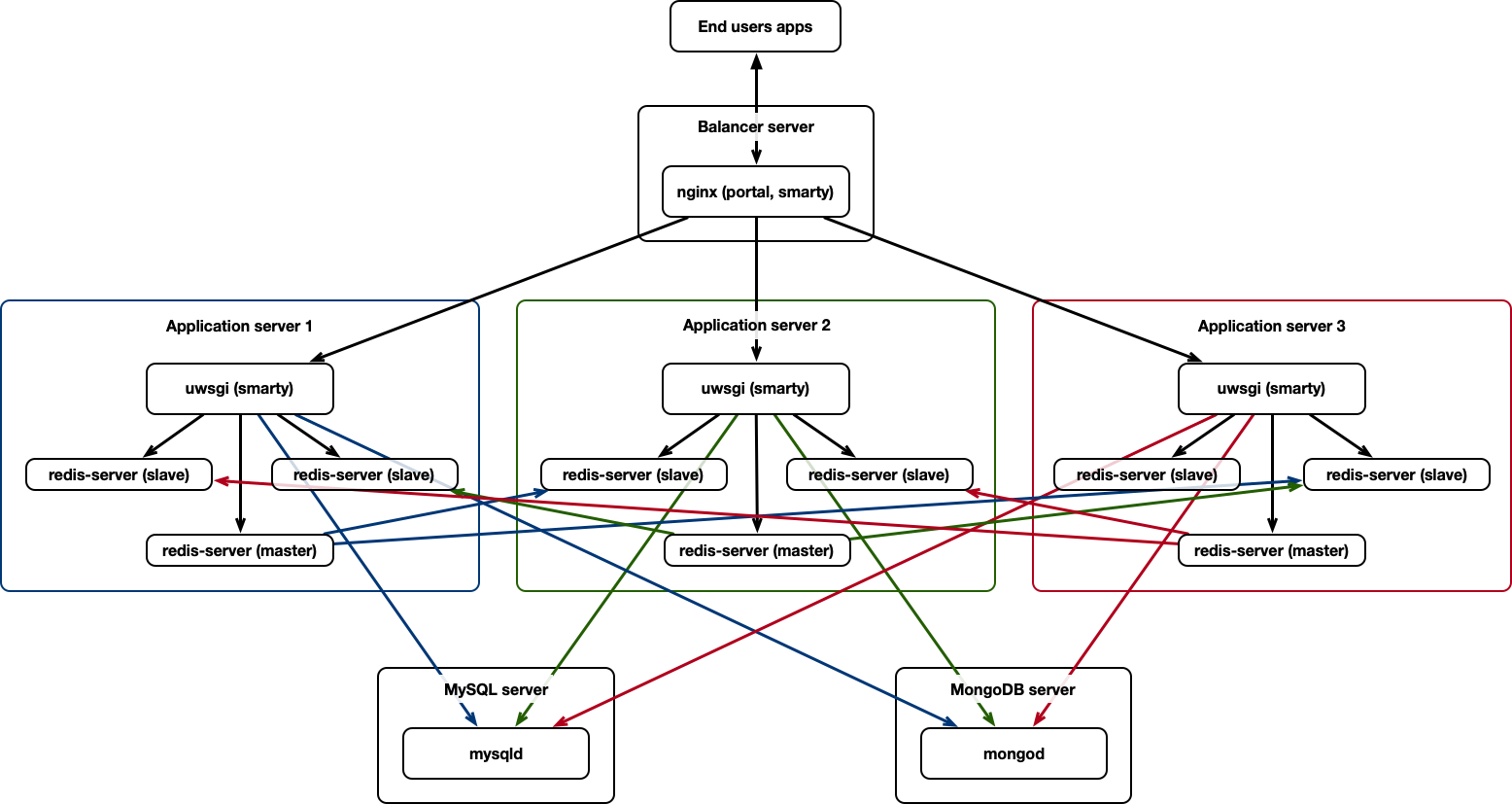

uWSGI + Redis Cluster load balancing configuration with partial fault tolerance

This option repeats the previous one but adds Redis Cluster, which increases fault tolerance.
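As background on how Redis Cluster spreads data across masters: the key space is divided into 16384 hash slots, a key's slot is CRC16(key) mod 16384, and if the key contains a non-empty `{...}` hash tag, only the tag is hashed. The sketch below reimplements this mapping in plain Python for illustration (a cluster-aware client normally does it for you). The even split of slots among three masters and the master names are assumptions.

```python
def crc16_xmodem(data: bytes) -> int:
    """CRC16, XMODEM variant (poly 0x1021, init 0), as used by Redis Cluster."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_hash_slot(key: str) -> int:
    """Map a key to one of 16384 slots, honouring a non-empty {hash tag}."""
    raw = key.encode()
    start = raw.find(b"{")
    if start != -1:
        end = raw.find(b"}", start + 1)
        if end > start + 1:  # hash only the tag, and only if it is non-empty
            raw = raw[start + 1:end]
    return crc16_xmodem(raw) % 16384


# Assumed even split of the slots between three masters (names hypothetical).
SLOT_RANGES = [(0, 5460, "master-1"), (5461, 10922, "master-2"), (10923, 16383, "master-3")]


def master_for(key: str) -> str:
    slot = key_hash_slot(key)
    for first, last, master in SLOT_RANGES:
        if first <= slot <= last:
            return master
    raise ValueError(f"slot {slot} is not assigned")


print(key_hash_slot("123456789"), master_for("123456789"))  # 12739 master-3
```

Hash tags let related keys (for example `{user1000}.following` and `{user1000}.followers`) land in the same slot, and therefore on the same master.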

Instead of a separate Redis server, this variant places three Redis instances on each Smarty server: one master and two slaves, arranged so that the two slaves of each master run on the other servers.
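The placement rule can be expressed as a ring: the master of shard *i* lives on server *i*, and its two slaves go to the next two servers. The sketch below builds such a layout and checks that losing any single server still leaves a copy of every shard. Server names are hypothetical, and the check only covers data placement; actual failover in Redis Cluster also requires a majority of masters to agree that the failed master is down, which the two surviving masters out of three still provide.

```python
def place_instances(servers, replicas_per_master=2):
    """Ring layout: master i on server i, its replicas on the next servers."""
    n = len(servers)
    if replicas_per_master >= n:
        raise ValueError("each replica needs a server other than its master's")
    layout = {server: [] for server in servers}
    for shard, server in enumerate(servers):
        layout[server].append(("master", shard))
        for offset in range(1, replicas_per_master + 1):
            layout[servers[(shard + offset) % n]].append(("replica", shard))
    return layout


def shards_lost(layout, failed_server):
    """Shards with no surviving instance (master or replica) if failed_server dies."""
    all_shards = {shard for insts in layout.values() for _, shard in insts}
    surviving = {
        shard
        for server, insts in layout.items()
        if server != failed_server
        for _, shard in insts
    }
    return all_shards - surviving


servers = ["smarty-1", "smarty-2", "smarty-3"]  # hypothetical host names
layout = place_instances(servers)
for server, instances in layout.items():
    print(server, instances)
print({s: shards_lost(layout, s) for s in servers})  # every value is set()
```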

In this scheme, the SQL server and the balancer remain without redundancy.

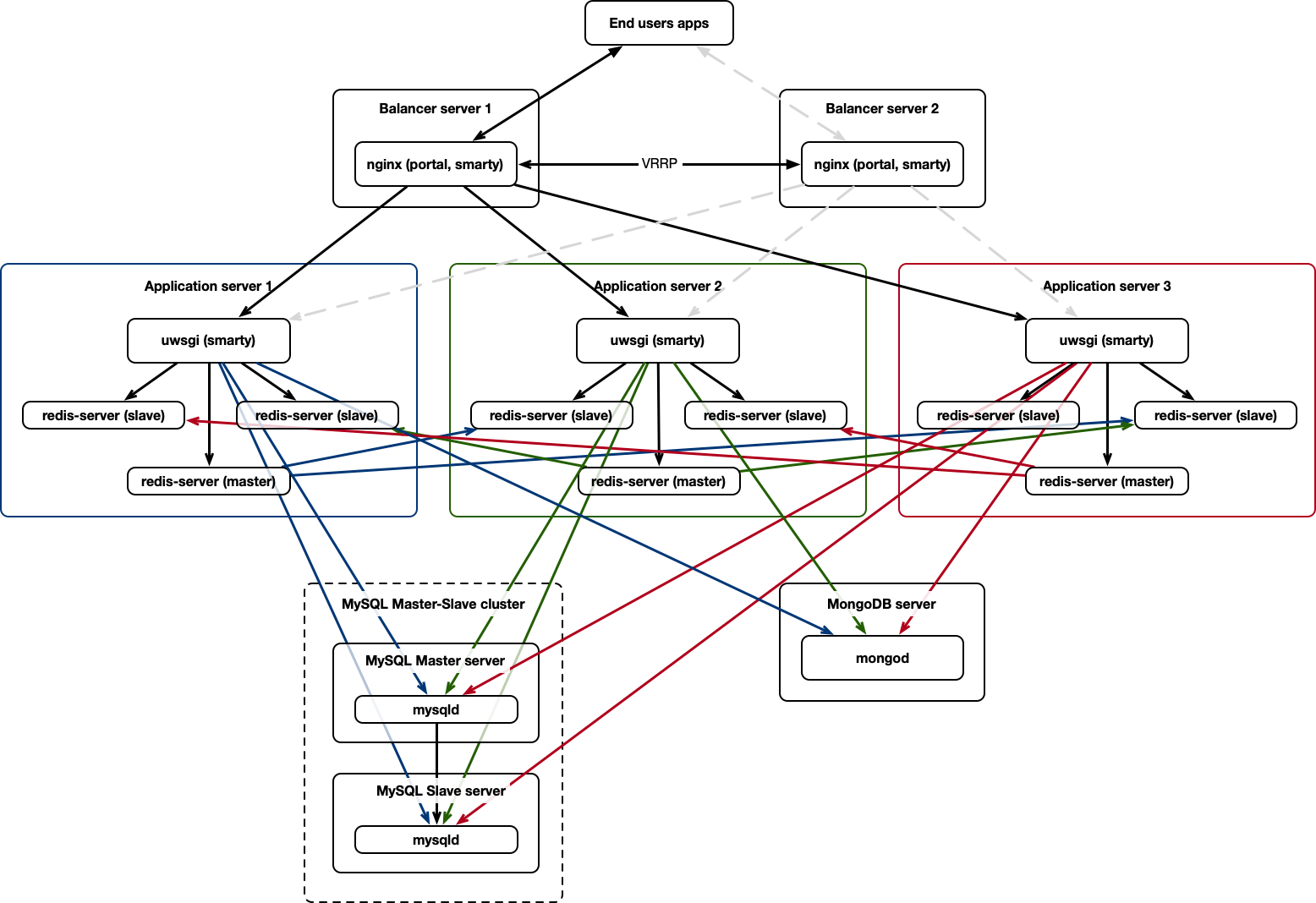

uWSGI + Redis Cluster + SQL Master-Slave Replication with load balancing

This variant adds several balancers and basic SQL replication.

Two (or more) request balancers must back each other up and share the same floating IP address. This is provided by the VRRP protocol and can be implemented with keepalived or a similar service. If one of the balancers fails, its public IP address is automatically brought up on the other one, and the service remains available.
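The floating-IP behaviour can be modeled as a VRRP election: among the balancers that are still alive, the one with the highest priority holds the virtual IP, and equal priorities are broken by the higher primary IP address. The sketch below is a simplified model (it assumes preemption is enabled and ignores advertisement timers); in keepalived the priority comes from the `priority` setting of a `vrrp_instance`. Names, addresses and priorities are hypothetical.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class Balancer:
    name: str
    ip: str          # the balancer's own (primary) address
    priority: int    # VRRP priority; higher wins
    alive: bool = True


def vip_holder(balancers):
    """Return the balancer that should hold the floating IP, or None if all are down.

    Highest priority wins; equal priorities are broken by the higher primary IP.
    """
    candidates = [b for b in balancers if b.alive]
    if not candidates:
        return None
    return max(candidates, key=lambda b: (b.priority, ipaddress.ip_address(b.ip)))


lb1 = Balancer("lb-1", "192.0.2.11", priority=150)
lb2 = Balancer("lb-2", "192.0.2.12", priority=100)
print(vip_holder([lb1, lb2]).name)  # lb-1
lb1.alive = False                   # lb-1 fails, so the floating IP moves
print(vip_holder([lb1, lb2]).name)  # lb-2
```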

Master-Slave database replication lets you distribute the load and keep a cold standby. If the SQL Master node fails, manual reconfiguration is required to connect to the new Master node.
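One common way to use the Slaves for load distribution is to send writes to the Master and reads to the Slaves. The sketch below models that routing together with the manual failover step described above. It is a hypothetical illustration (class, method and host names are invented), not Smarty's connection logic, and it ignores replication lag, which can make freshly written data briefly invisible on a Slave.

```python
from itertools import cycle


class MasterSlaveRouter:
    """Writes go to the Master; reads are spread round-robin over the Slaves."""

    def __init__(self, master, slaves):
        self.master = master
        self.slaves = list(slaves)
        self.master_alive = True
        self._reads = cycle(self.slaves) if self.slaves else None

    def for_write(self):
        if not self.master_alive:
            # In this scheme a Slave is not promoted automatically.
            raise RuntimeError("Master is down: manual reconfiguration required")
        return self.master

    def for_read(self):
        # Fall back to the Master when there are no Slaves.
        return next(self._reads) if self._reads else self.master

    def promote(self, new_master):
        """The manual step: make a former Slave the new Master."""
        self.slaves.remove(new_master)
        self.master = new_master
        self.master_alive = True
        self._reads = cycle(self.slaves) if self.slaves else None


router = MasterSlaveRouter("sql-master", ["sql-slave-1", "sql-slave-2"])  # hypothetical hosts
print(router.for_write(), router.for_read(), router.for_read())  # sql-master sql-slave-1 sql-slave-2
router.master_alive = False      # the Master fails; reads still work
router.promote("sql-slave-1")    # operator switches to the new Master
print(router.for_write(), router.for_read())  # sql-slave-1 sql-slave-2
```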

Multi-Master replication is covered below.

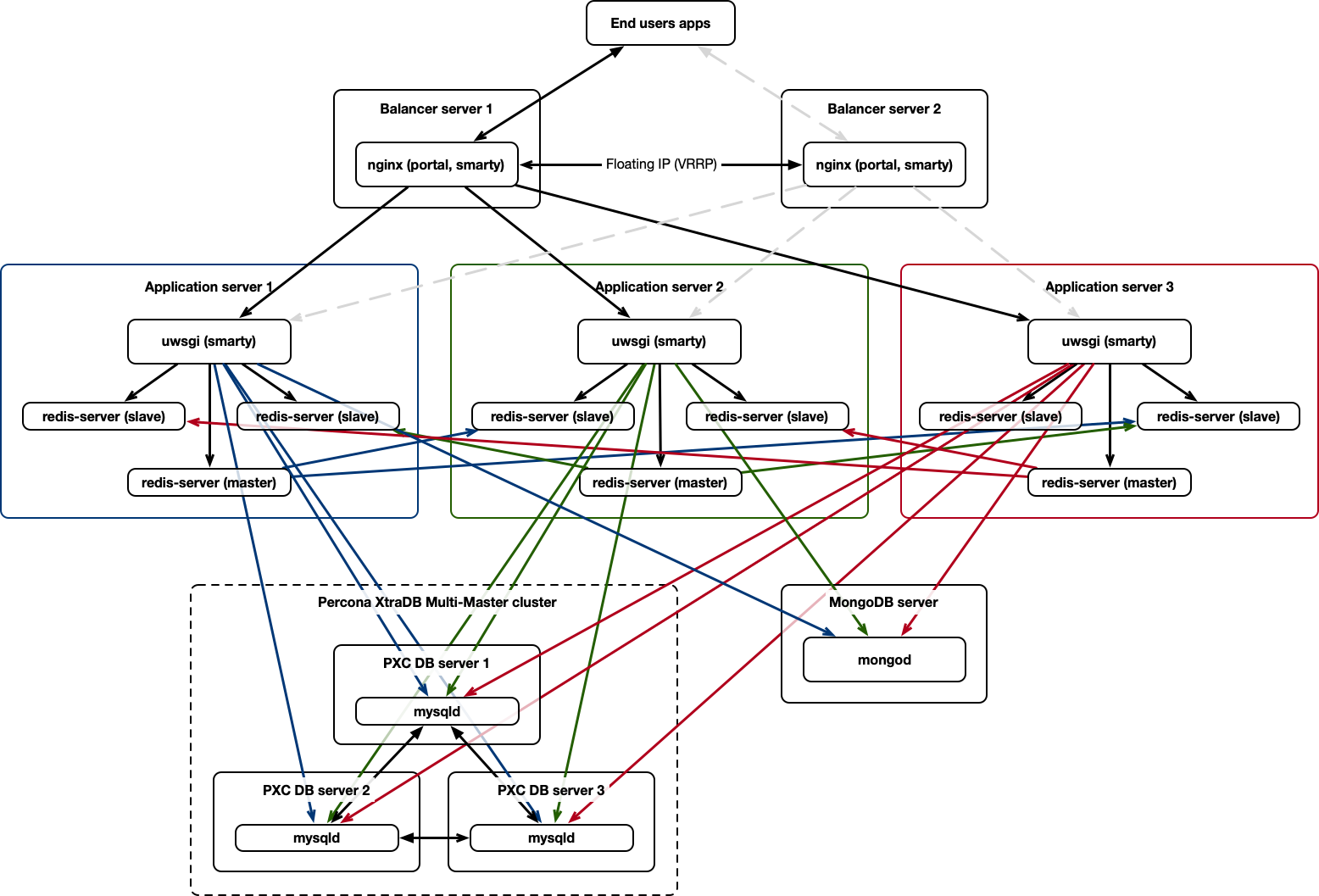

uWSGI + Redis Cluster + SQL Master-Master Replication with load balancing

This variant repeats the previous one, but uses Percona XtraDB Cluster instead of basic Master-Slave replication.

Multi-Master replication increases fault tolerance because, if any of the servers drops out, no manual intervention is required to rebuild the cluster.
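Percona XtraDB Cluster is built on Galera replication, which keeps a part of the cluster operational only if it holds a majority (quorum) of the nodes. The arithmetic below is a sketch assuming equal node weights and simultaneous failures. It shows how many nodes can drop out without manual intervention, and why odd node counts such as three are commonly used. Galera evaluates quorum against the last primary component, so successive failures can behave differently.

```python
def has_quorum(alive_nodes: int, total_nodes: int) -> bool:
    """True if the surviving nodes are a strict majority (equal node weights)."""
    return 2 * alive_nodes > total_nodes


def tolerated_failures(total_nodes: int) -> int:
    """How many nodes can fail at once while a majority still remains."""
    return (total_nodes - 1) // 2


for n in (2, 3, 4, 5):
    print(f"{n} nodes: survives {tolerated_failures(n)} simultaneous failure(s)")
```

With two nodes, losing either one costs the quorum, while a third node lets the cluster survive one failure; a fourth node adds no extra tolerance.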

The arrows in all diagrams show direct connections between services, so Smarty connects to each SQL node directly and specifies the node's role (Master or Slave).

Another solution that supports this mode of operation, such as Oracle RAC, can be used instead of Percona. In that case, Smarty connects to a single RAC pool and failover is transparent.

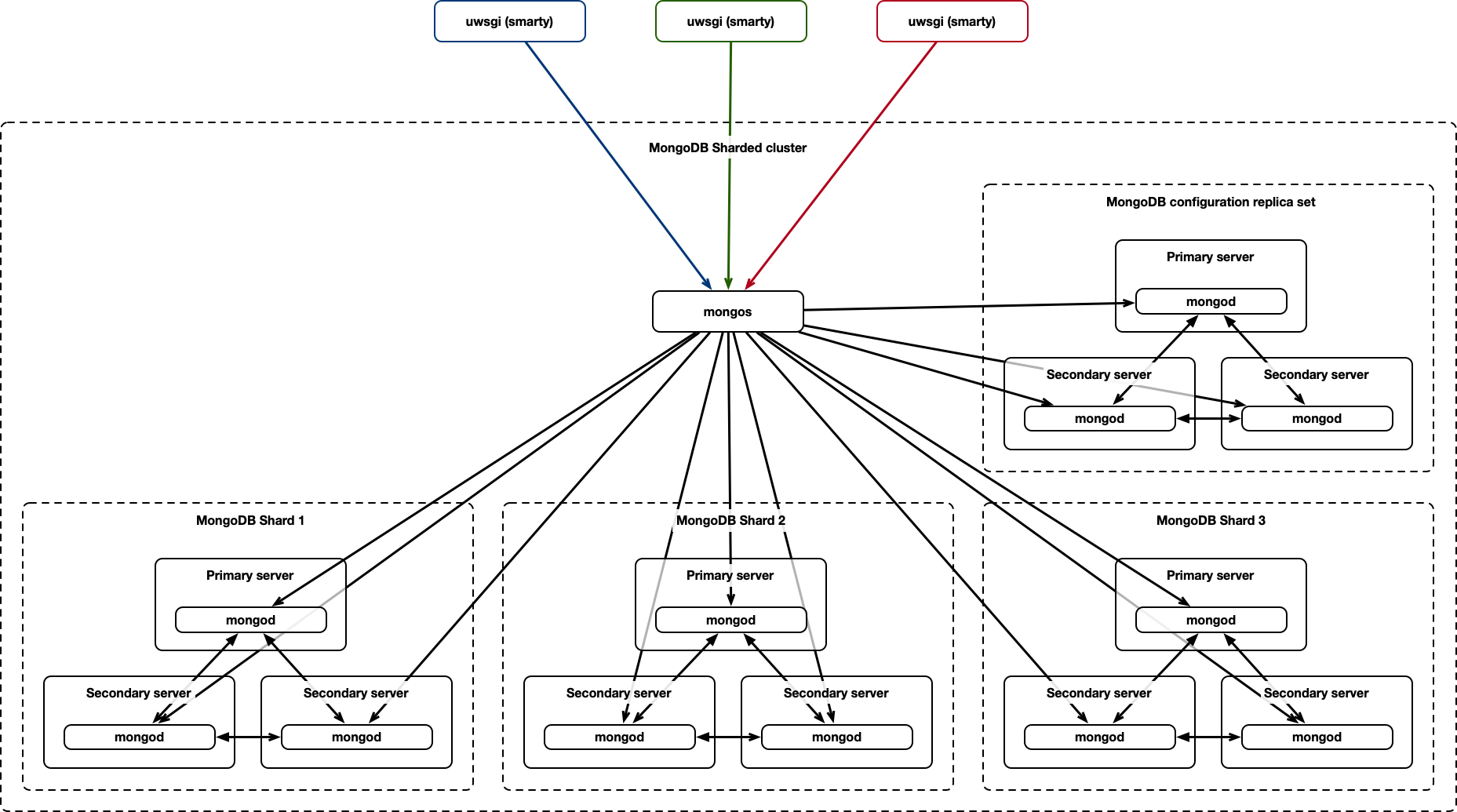

uWSGI + Redis Cluster + SQL Master-Master Replication + MongoDB Sharded Cluster

For a fully fault-tolerant configuration that also covers the optional MongoDB service, MongoDB's sharding and replication mechanisms must be used. In this variant, connections go through the mongos router, as shown below.

Such a scheme may be necessary with a very large volume of data, a significant number of online users and strict technical requirements for the service.
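To illustrate what the mongos router does, the sketch below models ranged sharding. Each chunk covers a contiguous range of shard-key values and belongs to one shard, so mongos can send a query for a single key, or a narrow range, only to the shards that own those chunks. Queries that do not include the shard key have to be broadcast to all shards. The chunk boundaries, shard names and numeric shard key are hypothetical. Real mongos instances read chunk metadata from the config servers, and MongoDB also supports hashed sharding, which this sketch does not cover.

```python
import bisect

# Hypothetical chunk map for a numeric shard key: each entry is the inclusive
# lower bound of a chunk and the shard that owns it; a chunk ends where the
# next one starts. float("-inf") plays the role of MongoDB's MinKey.
CHUNKS = [
    (float("-inf"), "shard-a"),
    (1_000_000, "shard-b"),
    (2_000_000, "shard-c"),
]
BOUNDS = [lower for lower, _ in CHUNKS]


def route(key):
    """Shard that owns the chunk containing this shard-key value."""
    return CHUNKS[bisect.bisect_right(BOUNDS, key) - 1][1]


def shards_for_range(low, high):
    """Shards a query on low <= key <= high has to visit (a targeted query)."""
    first = bisect.bisect_right(BOUNDS, low) - 1
    last = bisect.bisect_right(BOUNDS, high) - 1
    return sorted({shard for _, shard in CHUNKS[first:last + 1]})


print(route(42), route(1_500_000))           # shard-a shard-b
print(shards_for_range(900_000, 1_100_000))  # ['shard-a', 'shard-b']
```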

Further scaling

As the next steps to increase system performance, you can:

- Increase the number of instances in clusters.

- Configure Redis Cluster on separate servers.

- Host the web portal on separate servers or in a CDN.

- Store media files (downloadable channel icons, EPG and movie covers) in a CDN.

- Use the built-in, cluster-aware mechanism for distributed execution of crontab tasks.

- Split Smarty server roles into separate clusters (for example, one cluster for processing TV viewing statistics, another for invalidating shared caches and another for processing API requests).

- Use an alternative application-level request balancing mechanism.
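Smarty's built-in mechanism for distributed crontab execution is not described here, so the following is only a hypothetical illustration of one technique for the same problem: rendezvous (highest-random-weight) hashing. Every node computes the same owner for each task, so each task runs on exactly one live node, and if a node drops out only its tasks move elsewhere. The sketch assumes all nodes share the same view of which nodes are alive; obtaining that view is outside its scope. Task and node names are invented.

```python
import hashlib


def _score(node: str, task: str) -> int:
    """Deterministic pseudo-random weight for a (node, task) pair."""
    digest = hashlib.sha256(f"{node}|{task}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def task_owner(task: str, alive_nodes) -> str:
    """Rendezvous hashing: the live node with the highest score owns the task."""
    return max(alive_nodes, key=lambda node: _score(node, task))


def should_run_here(task: str, this_node: str, alive_nodes) -> bool:
    """Each node calls this from its scheduler; only the owner runs the task."""
    return task_owner(task, alive_nodes) == this_node


nodes = ["smarty-1", "smarty-2", "smarty-3"]             # hypothetical node names
tasks = ["epg_import", "stats_rollup", "cache_cleanup"]  # hypothetical task names
for task in tasks:
    print(task, "->", task_owner(task, nodes))
```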